Previous – On a quest of reducing Jenkins build time – Part 1.

With all our efforts, we had managed to get our build pipeline time down to under 12 minutes. Our quest still continues…

We were using CVS, and our build job was taking a couple of minutes just to determine the change log. The next target was to check whether migrating from CVS to SVN would help us reduce our build times further.

Matthew Meyers (our infrastructure guru at IDeaS Inc.) had set up a cool Jenkins infrastructure in the IDeaS data center for our next-generation product. During a conversation, he suggested that we experiment with migrating our local Jenkins infrastructure to the data center and moving from CVS to SVN, to see whether that would reduce our build times further.

Matthew created a dedicated Jenkins slave for this effort, migrated CVS to SVN and set up this parallel experimental infrastructure. After some initial hiccups, we got all the tests running fine, and we were delighted to see that the total build pipeline time had dropped to less than 8 minutes. The SVN migration, bigger and better machines, and the SAN infrastructure all helped reduce the build times.

We finalized a date to cut over to this new infrastructure, and the cutover went through fine too. Now we have a nice consolidated Jenkins infrastructure in our data center.

This migration came with its own share of new lessons…

robocopy/rsync

Matthew introduced me to robocopy, and robocopy is so cool. Earlier we were using the plain copy command to copy the workspace, the database and files in general. Robocopy can mirror the files in a source folder to a destination folder, and the cool thing is that it automatically skips files that have not been modified. This saves a lot of time in file operations.
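The mirroring behavior described above can be sketched in a few lines. This is not robocopy's implementation; it is a minimal Python illustration that assumes a file is "unchanged" when its size and last-modified time match the destination copy (the quick check rsync uses by default, and close to how robocopy decides two files are the same). The function names `needs_copy` and `mirror` are hypothetical. With the real tools, the equivalent would be something like `robocopy <source> <destination> /MIR` on Windows or `rsync -a --delete <source>/ <destination>/` on Linux.

```python
import os
import shutil
from pathlib import Path


def needs_copy(src: Path, dst: Path) -> bool:
    """True if dst is missing or differs from src in size or modification time."""
    if not dst.exists():
        return True
    s, d = src.stat(), dst.stat()
    # "Quick check": compare size and mtime (truncated to whole seconds),
    # without reading file contents.
    return s.st_size != d.st_size or int(s.st_mtime) != int(d.st_mtime)


def mirror(src_root: Path, dst_root: Path) -> tuple[list[str], list[str]]:
    """Make dst_root mirror src_root; return (copied, deleted) relative paths."""
    copied, deleted = [], []
    seen = set()
    # Pass 1: copy new or modified files, skip unchanged ones.
    for dirpath, _dirs, files in os.walk(src_root):
        for name in files:
            src = Path(dirpath) / name
            rel = src.relative_to(src_root)
            seen.add(rel)
            dst = dst_root / rel
            if needs_copy(src, dst):
                dst.parent.mkdir(parents=True, exist_ok=True)
                shutil.copy2(src, dst)  # copy2 also copies the mtime
                copied.append(rel.as_posix())
    # Pass 2: purge files that no longer exist in the source.
    # (Empty directories are left in place in this sketch.)
    for dirpath, _dirs, files in os.walk(dst_root):
        for name in files:
            dst = Path(dirpath) / name
            rel = dst.relative_to(dst_root)
            if rel not in seen:
                dst.unlink()
                deleted.append(rel.as_posix())
    return sorted(copied), sorted(deleted)
```

Because unchanged files are skipped, a second run over an already mirrored workspace does almost no copying, which is where the time saving comes from.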

This ability let us add two more test jobs to our build pipeline, 1) REST Test and 2) Business Driven Tests, which we could not do earlier: both jobs require deploying the application and a larger pre-populated database to carry out the tests, and setting those up used to be a time-consuming activity.

Could not reserve enough space for object heap

As we added two more test jobs running in parallel, the jobs started failing with the error “Could not reserve enough space for object heap”. Until then I had seen this error only when the -Xmx<size> (maximum Java heap size) setting exceeded the limit for a 32-bit process. In this case it was a 64-bit process, the box had 32 GB of RAM, and while the jobs were running I could see ample free memory still available.

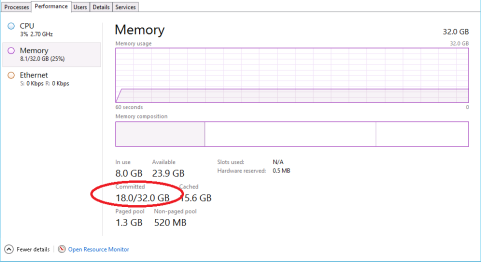

After spending a couple of hours googling and watching Task Manager while the jobs were running, I learned something new. Task Manager has a section called “Committed Memory”.

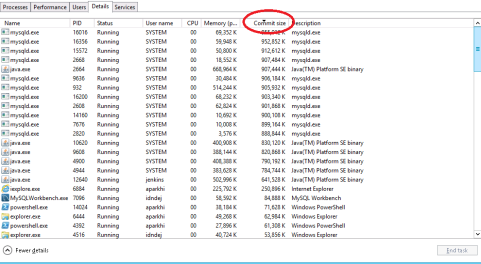

I observed that although the machine was showing a lot of free memory, I got the memory error whenever the committed memory crossed the physical memory threshold of 32 GB. Task Manager shows both the actual memory consumed and the committed memory for each process.
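To make the committed-memory observation concrete: Windows stops granting new commits once the total commit charge reaches the commit limit (roughly physical RAM plus the current page file size), no matter how much physical memory is actually in use at that moment. The sketch below adds up per-process commit sizes and compares them with a limit you pass in. The process counts and sizes are hypothetical placeholders, not measurements from our build, and `Proc` and `commit_headroom_mb` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Proc:
    name: str
    committed_mb: int  # per-process commit size, e.g. as read from Task Manager


def commit_headroom_mb(procs: list[Proc], commit_limit_mb: int) -> int:
    """Commit still available before new allocations fail.

    A negative result means the processes together exceed the commit
    limit, even if plenty of physical memory looks "free".
    """
    total_committed = sum(p.committed_mb for p in procs)
    return commit_limit_mb - total_committed


# Hypothetical snapshot: 12 JVMs and 3 mysqld instances, 32 GB commit limit.
snapshot = [Proc(f"java-{i}", 2300) for i in range(12)] + [
    Proc(f"mysqld-{i}", 2100) for i in range(3)
]
headroom = commit_headroom_mb(snapshot, commit_limit_mb=32 * 1024)
print(f"headroom: {headroom} MB")  # negative: the next JVM start may fail
```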

Using Task Manager, I found that all the java processes and some mysql processes were the big consumers, most of them at 2+ GB of committed memory each. After reducing the -Xmx value, the committed memory of the java processes went down.

After reducing the MySQL variable key_buffer_size, the committed memory of the mysql processes went down as well.

Finally, with the committed memory brought down, all the jobs started running in parallel without any issues.
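The fix boils down to budgeting commit charge per process. As a rough sketch, assuming you know the commit limit, how much to keep aside for the OS and the MySQL instances (whose share we cut via key_buffer_size), and an estimated non-heap overhead per JVM, you can derive an upper bound for -Xmx. The overhead figure and the function name `max_heap_flag` are assumptions for illustration; a real JVM also commits memory outside the heap (metaspace, thread stacks, code cache), so measure your own processes before relying on the number.

```python
def max_heap_flag(commit_limit_mb: int, reserved_mb: int, jvm_count: int,
                  non_heap_mb: int) -> str:
    """Largest -Xmx (in MB) such that jvm_count JVMs fit in the commit budget.

    reserved_mb: commit kept aside for the OS, MySQL and other processes.
    non_heap_mb: assumed per-JVM overhead outside the heap (a guess to measure).
    """
    if jvm_count <= 0:
        raise ValueError("jvm_count must be positive")
    budget = commit_limit_mb - reserved_mb
    per_jvm_heap = budget // jvm_count - non_heap_mb
    if per_jvm_heap <= 0:
        raise ValueError("commit budget too small for this many JVMs")
    return f"-Xmx{per_jvm_heap}m"


# Hypothetical inputs: 32 GB limit, 8 GB reserved, 12 JVMs, 256 MB overhead each.
print(max_heap_flag(32 * 1024, 8 * 1024, 12, 256))  # -Xmx1792m
```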

Now, even with the two additional test jobs, our build pipeline time is down to 10 minutes.
